SDRangel driven by Ollama LLM using MCP

SDRangel operated by Ollama Local Language Model using the Model Context Protocol.

Info @ joopk@hccnet.nl

This is an experiment in using a local AI language model to control and operate SDRangel, a sophisticated signal-analyzer front end. The goal was to control SDRangel by talking or typing to it in natural language. The system uses several components, which are discussed and shown in this wiki article.

Using AI in the amateur radio world was also a topic at the 49th International Amateur Radio Exhibition in Friedrichshafen, where Jochen Berns, DL1YBL, gave a lecture about AI agents and reasoning models. This inspired me to experiment with MCP servers and Ollama, to see how AI can help operate a rather sophisticated program like SDRangel. Possible client requests are "Set up Pluto on channel 10 to transmit to QO-100 at 333 kilosamples/s", "I would like to listen to Radio Veronica on the FM band, set up the best parameters", "Scan the 20 meter band in SSB mode and show a map", or simply "increase volume".

Main components: SDRangel, Ollama, Node-RED, and the Node-RED Dashboard 2 UI Chat.

Installed on a Windows 11 laptop with WSL2 running Ubuntu 24.04.

Hardware recommendation: 16 GB of memory, because of the LLM.

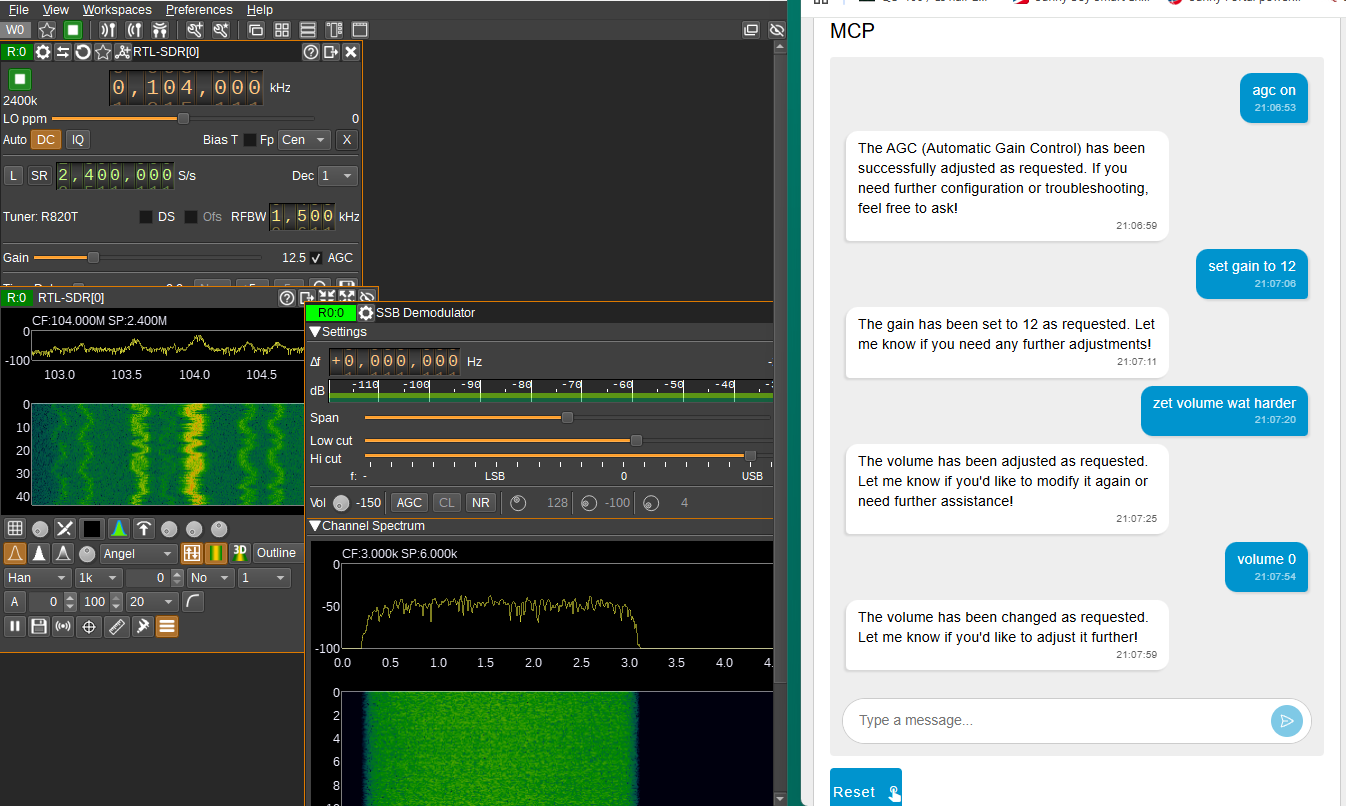

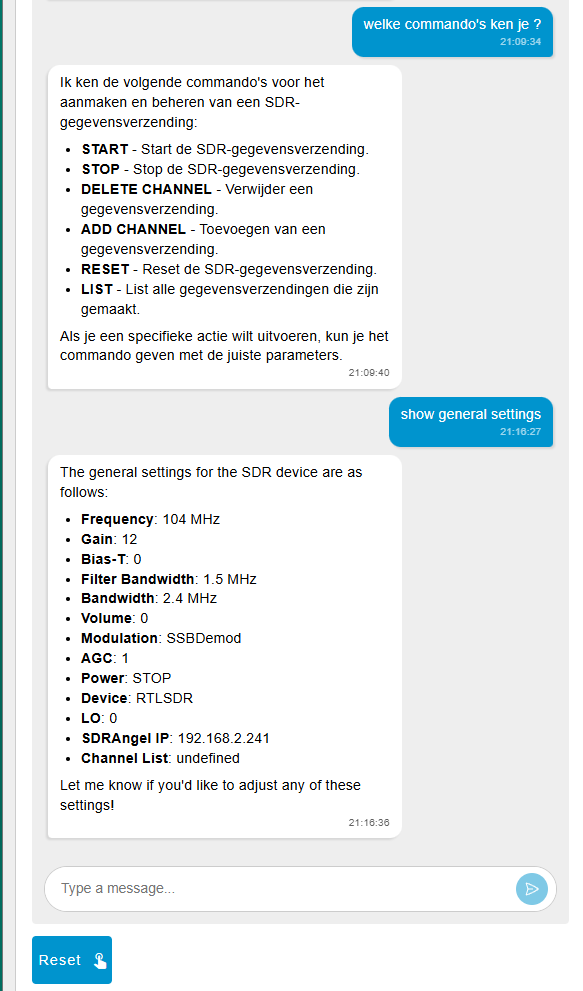

Example of simple client commands to SDRangel:

Client interaction example:

The detailed explanation and system flows are discussed below. The following components are used:

- Node-RED on Windows

- ollama-mcp-bridge on Ubuntu (WSL2)

- mcp-proxy on Ubuntu (WSL2)

- Ollama with the qwen3:1.7b model on Windows

- SDRangel on Windows

High-level process:

The MCP bridge connects to the MCP server provided by Node-RED (mcp-server node), collects all published tools and passes this information to Ollama. In the Node-RED chat (Dashboard 2 UI Chat node) the client types a question or command in natural language. The chat completion request is sent to the bridge, which forwards it to Ollama. If the language model decides to call one of the published tools, the Node-RED MCP server node receives the call and performs the specific operation, for example sending an API command to SDRangel. The MCP server's response is fed back to the Ollama LLM, which formulates an answer or summarizes the action performed and returns it to the client.
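To make this message flow more concrete, here is a minimal Python sketch of the requests involved. It assumes the bridge accepts Ollama's /api/chat request format and that the published MCP tool descriptors (name, description, inputSchema) end up in Ollama's function-tool format. ollama-mcp-bridge performs this translation itself, so this is only an illustration, not its actual code. The bridge URL and the tool name set_sdrangel_settings are hypothetical.

```python
import json
import urllib.request

# Hypothetical bridge endpoint; check the address and port your bridge really uses.
BRIDGE_URL = "http://localhost:8000/api/chat"


def client_request(prompt: str, model: str = "qwen3:1.7b") -> dict:
    """What the chat client sends: an Ollama-style /api/chat body without tools."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def mcp_tool_to_ollama(tool: dict) -> dict:
    """Translate an MCP tool descriptor into Ollama's function-tool format."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP's inputSchema is already a JSON Schema object.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


def bridge_forward(request: dict, mcp_tools: list) -> dict:
    """What the bridge forwards to Ollama: the client request plus the published tools."""
    return {**request, "tools": [mcp_tool_to_ollama(t) for t in mcp_tools]}


def tool_calls(reply: dict) -> list:
    """List the (name, arguments) pairs the model asked for in an /api/chat reply."""
    calls = reply.get("message", {}).get("tool_calls") or []
    return [(c["function"]["name"], c["function"].get("arguments", {})) for c in calls]


def send_to_bridge(request: dict) -> dict:
    """POST a chat request to the bridge and return the decoded JSON reply."""
    req = urllib.request.Request(
        BRIDGE_URL,
        data=json.dumps(request).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    # Hypothetical tool as the Node-RED MCP server might publish it.
    tools = [{
        "name": "set_sdrangel_settings",
        "description": "Change settings of the SDRangel channel",
        "inputSchema": {"type": "object", "properties": {"volume": {"type": "number"}}},
    }]
    print(json.dumps(bridge_forward(client_request("increase volume"), tools), indent=2))
```

When the model's reply contains a tool call, the MCP server executes it and the result goes back to the model as a message with role "tool", after which the model writes its answer for the chat client.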

Specifics and examples:

The MCP bridge configuration calls the MCP server running in Node-RED. Because this server speaks HTTP (SSE/streamable HTTP) and the bridge needs "STDIO" communication, mcp-proxy is placed in between. The JSON config for the bridge is therefore simple:

```json
{
  "mcpServers": {
    "mcp-proxy": {
      "command": "mcp-proxy",
      "args": [
        "http://ip-address-node-red:8001/mcp",
        "--transport=streamablehttp"
      ]
    }
  }
}
```

The Node-RED MCP server has a specific registry node that tells the LLM in what format it can make requests to the server. This format is described as a "JSON Schema", which is itself a JSON file. The LLM parses this schema, and because it also contains a description of what each entry is responsible for, the LLM can decide what to send back to the MCP server.
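As an illustration, a registry entry could describe a tool's input with a schema like the one below. The parameter names are hypothetical and not taken from the actual flow. The small Python check shows what the MCP server side could do with the arguments the LLM sends back before anything reaches SDRangel. It only covers required keys, unknown keys and primitive types, so a full JSON Schema validator would be the more complete choice.

```python
# Hypothetical input schema a Node-RED registry node could publish for one tool.
TOOL_SCHEMA = {
    "type": "object",
    "properties": {
        "center_frequency_hz": {
            "type": "integer",
            "description": "Receiver centre frequency in Hz, e.g. 100000000 for 100 MHz",
        },
        "gain_db": {"type": "number", "description": "RF gain in dB"},
        "volume": {"type": "number", "description": "Audio volume"},
    },
    "required": [],
    "additionalProperties": False,
}

# Python types accepted for each JSON Schema primitive type.
_TYPES = {"integer": int, "number": (int, float), "string": str, "boolean": bool}


def check_arguments(args: dict, schema: dict) -> list:
    """Return a list of problems with LLM-supplied arguments (empty list means OK)."""
    problems = []
    props = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in args:
            problems.append(f"missing required '{key}'")
    for key, value in args.items():
        if key not in props:
            if schema.get("additionalProperties", True) is False:
                problems.append(f"unknown parameter '{key}'")
            continue
        kind = props[key].get("type")
        expected = _TYPES.get(kind)
        if expected is None:
            continue  # no primitive type to check
        # bool is a subclass of int in Python, so only accept it for "boolean".
        is_bad_bool = isinstance(value, bool) and kind != "boolean"
        if not isinstance(value, expected) or is_bad_bool:
            problems.append(f"'{key}' should be {kind}")
    return problems
```

For example, if the model answers "change freq to 100 MHz" with the string "100 MHz" instead of an integer number of Hz, the check reports it, and the flow can ask the model to try again instead of sending a bad value to SDRangel.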

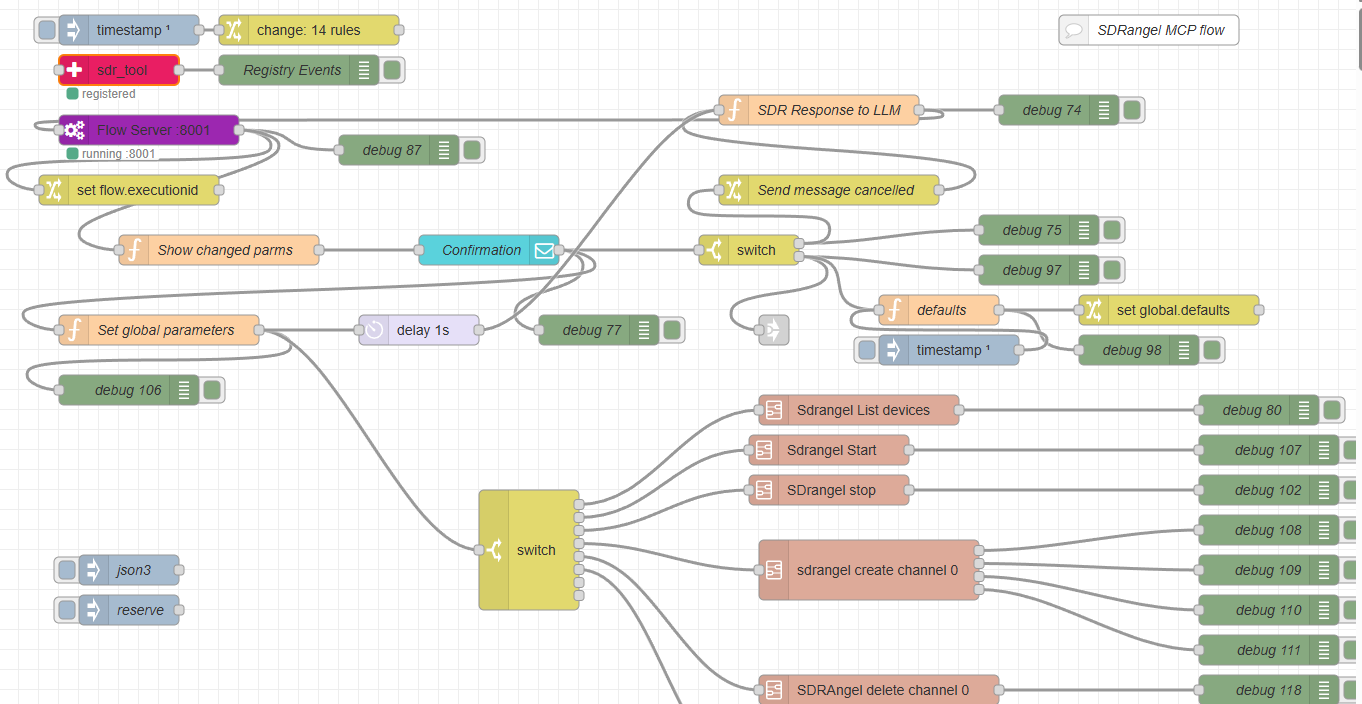

The Node-RED flow: the red and purple nodes belong to the MCP server. The brown nodes are the API calls to SDRangel that change its settings. Currently only one channel is implemented, as a proof of concept. The other nodes handle default settings and a "man in the middle". Because the LLM's decisions are sometimes wrong, we need to verify which changes it is going to execute. Therefore a notification window pops up asking you (or me) to approve or cancel the change requested by the LLM. The LLM can set all parameters at once or one per client request, depending on what is asked. If I ask "change freq to 100 MHz and set gain to 12 dB", it changes two parameters of the current SDRangel control set. If I ask "I would like to listen to commercial broadcast station Veronica, set optimum parameters", the LLM might set five or six parameters at the same time. Since these are not always correct, the "man in the middle" (you) decides whether to execute them or not.
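The "man in the middle" step can be sketched in Python as follows. The sketch assumes SDRangel's REST API on its default port 8091, the channel settings endpoint /sdrangel/deviceset/{index}/channel/{index}/settings, and a WFM demodulator channel; the field names (inputFrequencyOffset, volume) and the direction value are assumptions to check against the SDRangel API documentation. The approve callback is a hypothetical stand-in for the Node-RED notification popup.

```python
import json
import urllib.request

# Assumption: SDRangel REST API on its default port; adjust host/port to your setup.
SDRANGEL_API = "http://127.0.0.1:8091/sdrangel"


def diff_settings(current: dict, proposed: dict) -> dict:
    """Keep only the parameters the LLM actually wants to change."""
    return {k: v for k, v in proposed.items() if current.get(k) != v}


def channel_patch(device_set: int, channel: int, channel_type: str, changes: dict):
    """Build URL and body for a channel settings PATCH (e.g. channel_type 'WFMDemod')."""
    url = f"{SDRANGEL_API}/deviceset/{device_set}/channel/{channel}/settings"
    body = {
        "channelType": channel_type,
        "direction": 0,  # assumed: 0 = receive channel
        f"{channel_type}Settings": changes,
    }
    return url, body


def apply_if_approved(current: dict, proposed: dict, approve,
                      device_set: int = 0, channel: int = 0,
                      channel_type: str = "WFMDemod"):
    """Ask the operator before anything is sent to SDRangel.

    `approve` receives human-readable change lines and returns True (Execute)
    or False (Cancel). Returns (new_settings, request_or_None).
    """
    changes = diff_settings(current, proposed)
    if not changes:
        return current, None  # nothing to do
    lines = [f"{k}: {current.get(k)} -> {v}" for k, v in changes.items()]
    if not approve(lines):
        return current, None  # Cancel: keep the old settings
    request = channel_patch(device_set, channel, channel_type, changes)
    return {**current, **changes}, request


def send_patch(url: str, body: dict) -> int:
    """Send the approved PATCH to SDRangel and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )
    with urllib.request.urlopen(req) as resp:
        return resp.status
```

Only the changed parameters are sent, so a request like "increase volume" results in a PATCH body containing just the new volume, and the popup lists exactly what will change.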

The flow contains many debug nodes (green) to check the payload input. Experiments can be done with other models that have more parameters, or with MCP-compatible models in the cloud. Because the system is quite flexible, it has the potential to become quite powerful. The local Ollama LLM with the 1.7B Qwen model is quite responsive, but it can take a second before the tokens appear when it needs to report the status or a list of commands. The results are not always 100% correct, but fine-tuning, and perhaps creating some presets that the LLM can call once it knows they exist, would make the system very easy to operate. If you are interested and have ideas, let me know.

73, JKO

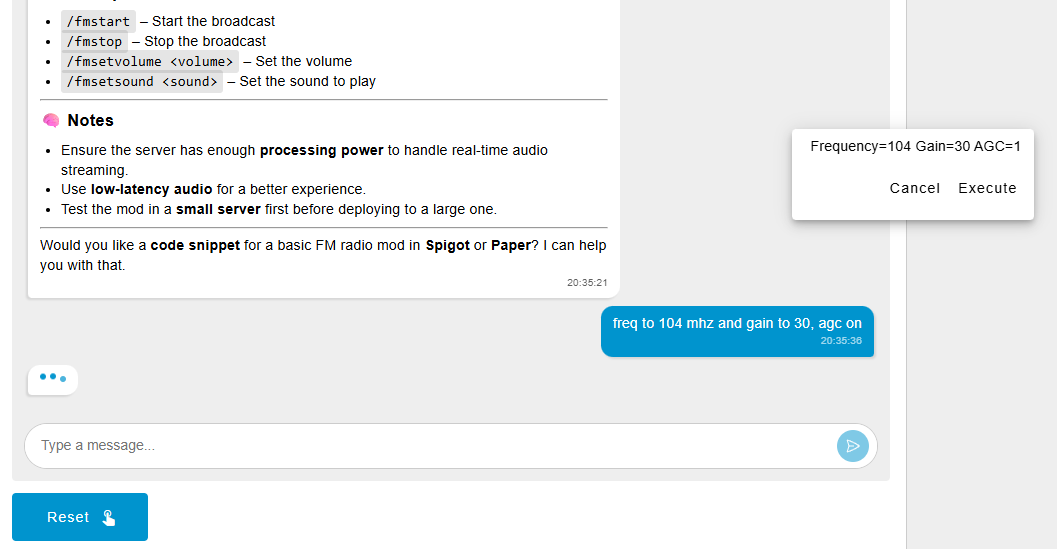

Example of the "man in the middle" confirmation popup, showing what will be changed according to the LLM. The blue chat window is my request; the popup appears in the top right corner with the buttons "Cancel" and "Execute".